For some recent customer work I had to deploy some Docker images on Kubernetes, but I didn’t want to set up a full-fledged cluster (nor pay for one), as it was going to be a temporary environment anyway. I therefore dusted off an old PC I had lying around in my basement and decided to use it as the base of my cluster.

What I needed

- At least 3 VMs to install Kubernetes (1 master + 2 nodes)

- One VM to host GitLab + GitLab Registry

- One VM to run a GitLab Runner (for pipelines)

Setting up the host

After some back-and-forth discussions with my colleague Kenny van de Maele, I went with CentOS 7 to host the VMs, mostly because I was already proficient with CentOS and very happy with the platform.

After booting the CentOS Minimal ISO, I was able to quickly set up the host machine. I then just had to enable my network card (which was not enabled by default) using nmcli d and nmtui, as described in the following blog post: https://lintut.com/how-to-setup-network-after-rhelcentos-7-minimal-installation/
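For reference, the command-line equivalent of the nmtui step looks roughly like the sketch below. The helper name enable_nic and the connection name enp2s0 are only assumptions for illustration; use whatever nmcli connection show reports on your machine.

```shell
# Sketch only: make a NetworkManager connection start at boot and bring it
# up right away. The connection name is passed in by the caller.
enable_nic() {
  local con_name="$1"
  nmcli connection modify "$con_name" connection.autoconnect yes &&
    nmcli connection up "$con_name"
}

# Example (run as root on the host; "enp2s0" is a hypothetical name):
#   nmcli d            # list devices and their current state
#   enable_nic enp2s0
```

Setting connection.autoconnect is what keeps the card enabled after a reboot; bringing the connection up only affects the current session.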

Building the VMs

Google was good to me by putting this link in the top results, which was exactly what I was looking for: https://www.cyberciti.biz/faq/how-to-install-kvm-on-centos-7-rhel-7-headless-server/

Since I was going to repeat the process a few times, I gathered all the bits from the blog post into a single script and ran it multiple times, changing the VM variable (which holds the VM name) each time. You could also remove that assignment from the script and pass the name on the command line instead:

$ VM=centos7-vm1 ./build_vm.sh

Here is the script:

D=/var/lib/libvirt/images

VM=centos7-vm1 ## vm name ##

mkdir -vp $D/$VM

cd $D/$VM

echo "instance-id: $VM" >> meta-data

echo "local-hostname: $VM" >> meta-data

cd $D/$VM

cat >user-data << EOF
#cloud-config

# Hostname management
preserve_hostname: False
hostname: $VM
fqdn: $VM.oasis.local

# Users
users:
  - default
  - name: eric
    groups: ['wheel']
    shell: /bin/bash
    sudo: ALL=(ALL) NOPASSWD:ALL
    ssh-authorized-keys:
      - <the public key matching my private key>

# Configure where output will go
output:
  all: ">> /var/log/cloud-init.log"

# Configure interaction with the SSH server
ssh_genkeytypes: ['ed25519', 'rsa']

# Install my public SSH key for the first user-defined user configured
# in cloud.cfg in the template (which is centos for CentOS cloud images)
ssh_authorized_keys:
  - <the public key matching my private key>

# Set the timezone for the VM
timezone: Europe/Brussels

# Restart networking, then remove cloud-init
runcmd:
  - systemctl stop network && systemctl start network
  - yum -y remove cloud-init
EOF

cd $D/$VM

cp /var/lib/libvirt/boot/CentOS-7-x86_64-GenericCloud.qcow2 $VM.qcow2

cd $D/$VM

export LIBGUESTFS_BACKEND=direct

qemu-img create -f qcow2 -o preallocation=metadata $VM.new.image 20G

virt-resize --quiet --expand /dev/sda1 $VM.qcow2 $VM.new.image

cd $D/$VM

mv $VM.new.image $VM.qcow2

mkisofs -o $VM-cidata.iso -V cidata -J -r user-data meta-data

virsh pool-create-as --name $VM --type dir --target $D/$VM

cd $D/$VM

virt-install --import --name $VM \
  --memory 1024 --vcpus 1 --cpu host \
  --disk $VM.qcow2,format=qcow2,bus=virtio \
  --disk $VM-cidata.iso,device=cdrom \
  --network bridge=virbr0,model=virtio \
  --os-type=linux \
  --os-variant=centos7.0 \
  --graphics spice \
  --noautoconsole

cd $D/$VM

virsh change-media $VM hda --eject --config

rm meta-data user-data $VM-cidata.iso

If you want to configure the VM memory and CPU count, you can do so by editing the --memory 1024 --vcpus 1 options of the virt-install command.
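Rather than editing the script for every VM, the name and sizing could be read from the environment with defaults. This is only a sketch of how the top of build_vm.sh might look: VM_MEMORY, VM_VCPUS and vm_sizing_args are hypothetical names that do not exist in the script above, and the defaults are the values used in this post.

```shell
#!/usr/bin/env bash
# Sketch of a header for build_vm.sh: values come from the environment,
# with the settings used in this post as defaults.
# VM_MEMORY and VM_VCPUS are hypothetical names, not part of the original script.
VM="${VM:-centos7-vm1}"          # VM name
VM_MEMORY="${VM_MEMORY:-1024}"   # memory in MiB
VM_VCPUS="${VM_VCPUS:-1}"        # number of virtual CPUs

# Print the sizing options to hand to virt-install, e.g.
#   virt-install --import $(vm_sizing_args) ...
vm_sizing_args() {
  printf -- '--name %s --memory %s --vcpus %s\n' "$VM" "$VM_MEMORY" "$VM_VCPUS"
}

vm_sizing_args

# Example: build the five VMs listed earlier (names are hypothetical):
#   for name in k8s-master k8s-node1 k8s-node2 gitlab gitlab-runner; do
#     VM="$name" VM_MEMORY=2048 ./build_vm.sh
#   done
```

With the ${VAR:-default} form, a value given on the command line wins and the script still runs unchanged when nothing is given.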

Configuring bridge networking

After I was done setting up the VMs, I realized KVM was hosting them on a different subnet from the rest of my LAN, which is perfectly fine but prevented me from connecting directly to the VMs. Since I’m using Ansible to configure my VMs, I need to be able to SSH directly into them, and I didn’t want to bother with using the host as a jump host.

It only required two configuration changes on the host and a permanent route in my router:
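To see which addresses the VMs actually received, libvirt can list its DHCP leases. A minimal sketch, assuming the VMs sit on libvirt's stock network named default (the one behind virbr0); vm_leases is just a hypothetical wrapper name.

```shell
# Sketch only: show the DHCP leases libvirt handed out, which includes each
# VM's IP address. "default" is libvirt's stock NAT network (virbr0).
vm_leases() {
  local network="${1:-default}"
  virsh net-dhcp-leases "$network"
}

# Example (on the KVM host):
#   vm_leases
```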

Disabling the host firewall

# systemctl stop firewalld && systemctl disable firewalld

I tried several fixes to keep iptables from dropping packets aimed at the VMs’ subnet, but my experience editing rules was too rudimentary, and I didn’t want to spend too much time securing a temporary platform hosted in my basement and only accessible from my LAN.

Enabling IP forwarding

This can be done by editing /etc/sysctl.conf and setting the parameter net.ipv4.ip_forward = 1

Afterwards, a simple call to /sbin/sysctl -p loads the file and applies the parameter. Because the setting lives in /etc/sysctl.conf, it also survives reboots.

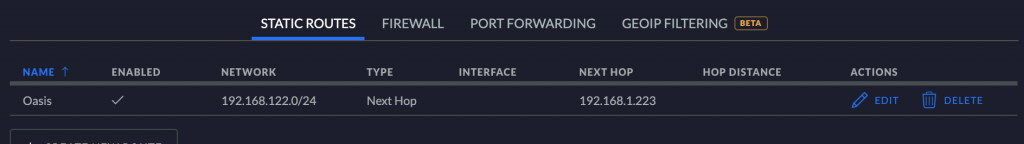
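If you would rather script the edit than open the file by hand, something like the following could work. It is a sketch, not what I ran: set_sysctl_param is a hypothetical helper, and it assumes GNU sed and a file with one key = value entry per line.

```shell
# Sketch only: set "key = value" in a sysctl-style file, replacing an existing
# entry for the same key or appending one if it is missing.
# Note: dots in the key are treated as regex wildcards, which is loose but
# harmless for typical sysctl keys.
set_sysctl_param() {
  local conf="$1" key="$2" value="$3"
  if grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$conf"; then
    sed -i -E "s|^[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$conf"
  else
    printf '%s = %s\n' "$key" "$value" >> "$conf"
  fi
}

# Example (as root on the host):
#   set_sysctl_param /etc/sysctl.conf net.ipv4.ip_forward 1
#   /sbin/sysctl -p
#   cat /proc/sys/net/ipv4/ip_forward   # check the running value
```

Replacing an existing entry instead of blindly appending keeps the file free of conflicting duplicates if the script is run more than once.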

Adding the route

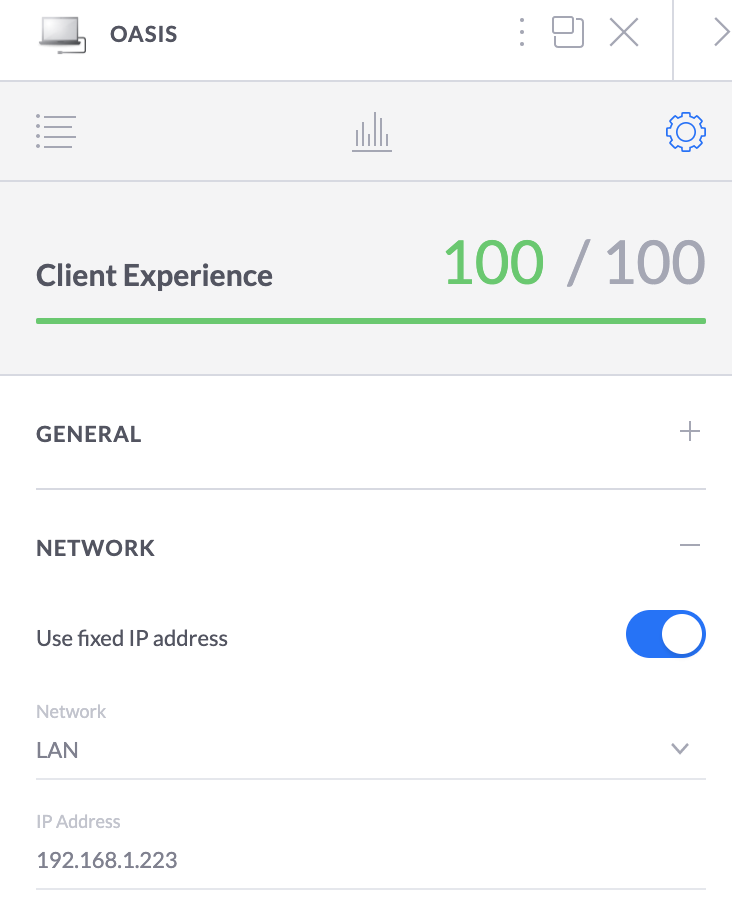

My LAN subnet is 192.168.1.0, and the VMs’ subnet is 192.168.122.0, so I had to use the host (which has IP 192.168.1.223 on my network) as the gateway to bridge both networks. Be sure to give your host a fixed IP if you want to set up a permanent route on your LAN.

I now realize this is already a lot to process, so I will most likely write a second blog post on the actual Kubernetes install. Stay tuned.
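If changing the router is not an option, the same route can be added on a single Linux machine instead. A minimal sketch, assuming the VM subnet uses a /24 mask (the post only gives the network address) and with add_vm_route as a hypothetical helper name:

```shell
# Sketch only: add a static route to the VM subnet on one Linux machine.
# Needs root; the route is lost at reboot unless made persistent separately.
add_vm_route() {
  local vm_subnet="$1" kvm_host="$2"
  ip route add "$vm_subnet" via "$kvm_host"
}

# Example, using the addresses from this post (the /24 mask is an assumption):
#   add_vm_route 192.168.122.0/24 192.168.1.223
```

The router-level route remains the more convenient choice, since it covers every device on the LAN at once.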